Graphic: CNN

The post-truth age is upon us, and the far right has wasted no time in embracing it.

What happened?

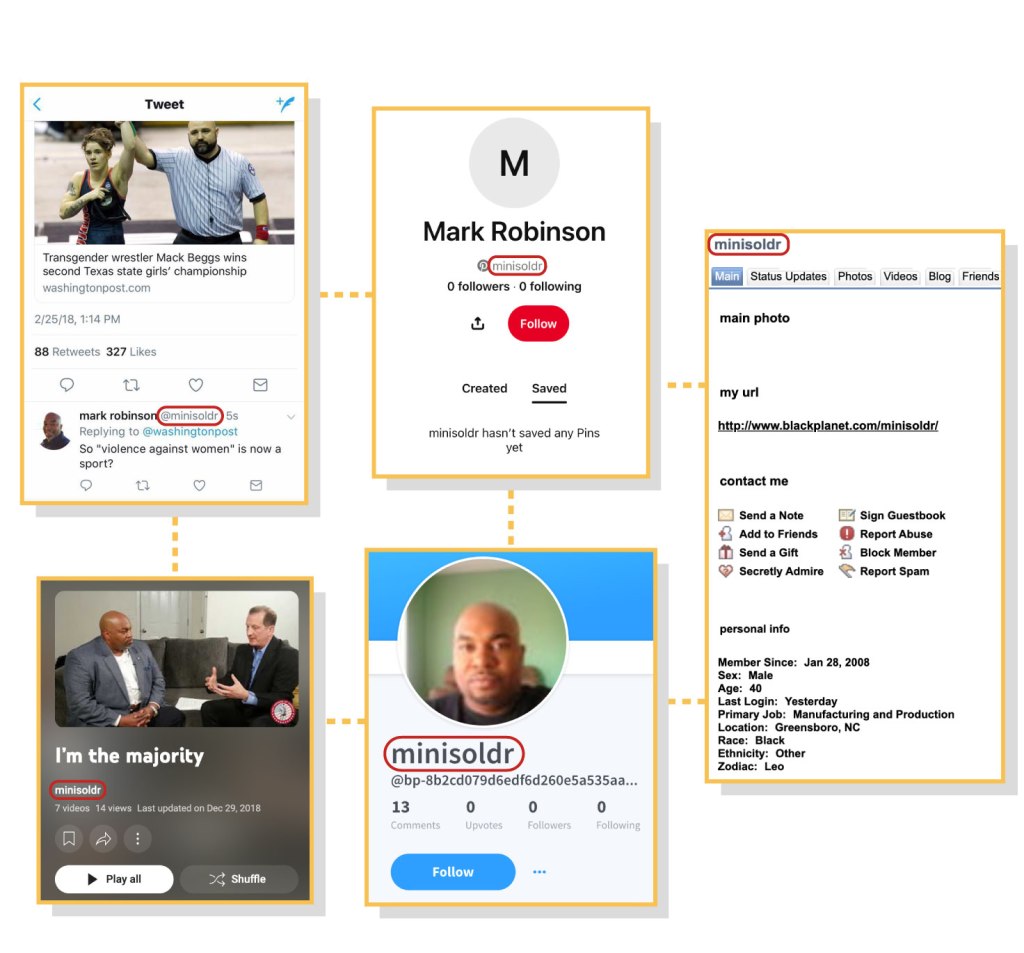

On Thursday, Sept. 19, CNN published a bombshell report highlighting a number of statements and comments made by Mark Robinson, the North Carolina GOP nominee for governor. Needless to say, this report was damning. By matching Robinson’s username and other available information, CNN was able to link the GOP nominee to a number of troubling posts made in the last 15 years.

These posts included Robinson self-identifying as a “black NAZI!”, expressing support for slavery, allegedly admitting to “peeping” on women in public showers, and a host of other equally troubling statements.

The evidence linking Robinson to these posts is extensive – see the full CNN report for details.

Robinson’s response to all of this? It wasn’t me, it was AI! In an interview with CNN reporter Andrew Kaczynski following the publication of the investigation, Robinson said that “…somebody manufactured these salacious tabloid lies, but I can tell you this: There’s been over one million dollars spent on me through AI by a billionaire’s son who’s bound and determined to destroy me.”

AI can do a great many things now, but it cannot rewrite the past. Given that the posts which CNN uncovered were made between 2008 and 2012, well before the age of AI we now live in, Robinson’s claims of AI tampering are almost certainly false.

Denial of evidence is an unfortunately typical response from a Republican embroiled in controversy nowadays, but this AI angle is new. Let’s dive into it.

Corrosive AI is a double-edged sword

We know that AI can be used to prop up false claims, but it can also be used to cast doubt on true ones. Mark Robinson’s response to his posting history being dragged into the light shows us this.

We saw a similar instance of this phenomenon earlier this summer, with Donald Trump and Laura Loomer claiming that the Harris campaign was using AI to generate imagery of large crowds at rallies (see my previous post about this). These claims were, shockingly, false. However, many have since speculated (myself included) that using AI to deny reality may be a way of laying the groundwork for another denial of election results, like we saw in 2020.

How Mark Robinson’s story plays out may give us some indication of the extent to which AI is corroding our collective trust – trust in politics, trust in the media, and trust in digital media itself. How Robinson fares in November won’t prove or disprove anything definitively. That being said, if the “black Nazi” is able to shake off this controversy and clinch an electoral win, it may be an indication that political trust is rapidly corroding.

How do we handle this?

Obviously there’s no easy solution to this issue. AI tech appears to be here to stay, and with that come risks. I’ve detailed some of the risks associated with generative AI technology before (see the Corrosive AI Thesis), namely that it can be misused to manufacture evidence to support false claims. What’s interesting about Mark Robinson’s story is that generative AI tech was seemingly never involved – only scapegoated.

So long as generative AI tech is available, anyone can claim that damning or embarrassing content about them is fake. I suspect this also applies to incriminating evidence, and I think it’s only a matter of time until we see this issue take the national stage.

So what to do? Regulation of synthetic content would certainly be a good start – California’s new legislation cracking down on sexually explicit deepfakes and requiring AI watermarking is a good example. This also brings up the idea of other potential technological solutions to AI issues. Could actors so easily claim that damning evidence is AI-generated if there were a handful of AI content detection tools available to the public? Would false claims of AI tampering with images and videos be as convincing if the majority of AI-generated content was watermarked?

I think technological solutions like these would help in identifying when generative AI is being used to support false claims, but the issue of “crying AI,” as Mark Robinson has done, remains. So long as generative AI is widely available, I suspect there will always be some doubt cast upon the authenticity of digital evidence.